The Legacy

In our digital-first generation, cloud computing has increased speed-to-market, event streaming has powered real-time processing, and AI has enabled hyper-personalized features. While these transformative forces have benefited users across all segments, they have also unveiled a new challenge for financial institutions. Institutions must now work within the constraints of their existing legacy systems while integrating these new technologies with little to no disruption to users.

Enter COBOL, designed in 1959 – predating Apollo 11 and the birth of the internet. For most consumers, every Amazon Prime impulse purchase, ATM run, and credit card point earned on a travel card is processed by COBOL on mainframes. In core platforms, COBOL has been lauded for its reliability and stability, attributes that are key to sustaining the financial sector.

The mainframe systems that still support financial institutions around the world today were built decades ago on COBOL and designed for longevity. These systems have accomplished their original goal for all intents and purposes. But the question arises: will COBOL mainframes continue to stand the test of time?

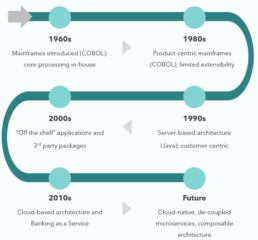

Exhibit 1. Evolution of Core Systems (1)(2)(3)

Pressure to Evolve

Clash with Flexibility

The COBOL-run mainframes were built on a rigid, monolithic architecture. They are hierarchical and parameter-driven (if this, then that), with very little room for flexibility, integration, or customization. As organizations have grown much larger through both M&A and customer growth over the decades, these legacy technologies now support far more complexity than they were designed for, and they have become a risky solution in the modern age.(4)

Personnel Challenges

On top of the inflexibility of these legacy systems, financial institutions are also encountering personnel challenges. Engineers today are not trained on COBOL – higher education institutions have, rightfully so, skewed towards teaching modern computer languages. As such, the talent pool of experts familiar with these aging systems is shrinking as many COBOL professionals reach or pass retirement age. Given this skill gap, there aren’t enough COBOL developers to meet demand and maintain these mainframe systems.(4)

Mainframe Expenses

Due to the age, lack of innovation, and sheer size of mainframes, they’ve become increasingly expensive to run. Banks are finding that they need to retain whole departments of COBOL engineers on payroll, for the sole, time-consuming purpose of managing core processing.

Demand for Digital-First

The imperative for platform modernization has never been more pressing. Embracing cloud computing, microservices architecture, event streaming, APIs, and now artificial intelligence isn’t merely about staying relevant; it’s about gaining the needed efficiency, agility, and innovation.

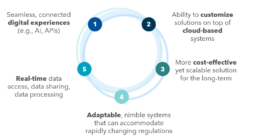

Exhibit 2. Consumer Demands (5)

Transformed Strategy: Today vs. Tomorrow

As the aging mainframe and COBOL fall out of favor, they invite opportunities to not only upgrade but also future-proof:

Today Batch Processing, Tomorrow Event Streaming

Data serves as the cornerstone in driving both monetization and strategic direction. In today’s landscape, organizations process high-volume data primarily through batch processing or event streaming. The comparison between batch processing and event streaming is akin to choosing between a horse-drawn carriage and a high-speed train. Today, event streaming is vital to the day-to-day operations in industries that are as data-sensitive and highly regulated as financial services:

- Real-Time: Allows data to be collected and processed in real-time, reducing latency that would be observed with batch processing

- Scalability: Equips organizations with the ability to handle large volumes of data, scaling to accommodate workload

- Dynamic: Enables data to be processed and analyzed as individual events, allowing for faster insights and decision-making
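The contrast between the two models can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: the `Transaction` type, the `FRAUD_LIMIT` threshold, and both functions are hypothetical names invented for this example. It compares a scheduled batch job, which flags large transactions only when the job runs, with a streaming handler that evaluates each event as it arrives.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple

# Hypothetical threshold, chosen only for illustration.
FRAUD_LIMIT = 5_000.00


@dataclass(frozen=True)
class Transaction:
    account: str
    amount: float
    minute: int  # minutes since start of day when the transaction occurred


def batch_flags(transactions: List[Transaction], run_minute: int) -> List[Tuple[str, int]]:
    """Batch: everything is examined once, when the scheduled job runs."""
    return [
        (t.account, run_minute - t.minute)  # delay between event and detection
        for t in transactions
        if t.amount > FRAUD_LIMIT
    ]


def stream_flags(events: Iterable[Transaction]) -> Iterator[Tuple[str, int]]:
    """Streaming: each event is examined on arrival, modeled here as zero delay."""
    for t in events:
        if t.amount > FRAUD_LIMIT:
            yield (t.account, 0)


day = [
    Transaction("A-1", 120.00, minute=60),
    Transaction("B-2", 9_800.00, minute=90),
    Transaction("C-3", 7_250.00, minute=600),
]

# Nightly batch at minute 1440 (end of day) versus per-event handling.
batch_result = batch_flags(day, run_minute=1440)
stream_result = list(stream_flags(day))
print(batch_result)   # detection delay in minutes per flagged account
print(stream_result)
```

In a real deployment the events would arrive from an event-streaming platform rather than an in-memory list; the list stands in for that feed so the sketch stays self-contained.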

Exhibit 3. Batch Processing vs Event Streaming

Today Mainframe, Tomorrow Microservices

While both have their strengths, microservices often offer significant advantages over mainframes in terms of flexibility, agility, and scalability. Mainframes can lead to issues such as bottlenecks, limited scalability, and high maintenance costs. Additionally, making changes or updates to monolithic applications can be challenging and risky, as any modification may require extensive testing and downtime, given that each piece of the mainframe is tightly entangled with a multitude of other pieces.

On the other hand, microservices architecture breaks down applications into smaller, independently deployable services that communicate with each other over a network. This decentralized approach allows for greater flexibility, as each service can be developed, deployed, and scaled independently.

Microservices also make maintenance easier and bring several related benefits:

- Speed-to-Market: Promote faster development cycles, as updates or changes to one service do not necessarily impact the entire system

- Continuous Integration & Continuous Deployment (CI/CD): Allow for code changes to be made frequently and reliably

- Resiliency: Offer better fault isolation, resilience, and scalability, as individual services can be scaled horizontally to handle increased load
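Fault isolation, in particular, can be shown with a small Python sketch. Everything here is an assumption made for illustration: `payments_service` and `rewards_service` are hypothetical, and plain functions stand in for what would be calls over a network. The point is only that a failure in one service is contained and does not take down the others.

```python
from typing import Callable, Dict


def payments_service(request: dict) -> dict:
    """Hypothetical service: authorizes a payment."""
    return {"status": "approved", "amount": request["amount"]}


def rewards_service(request: dict) -> dict:
    """Hypothetical service: simulated here as being down."""
    raise ConnectionError("rewards service unavailable")


def call(service: Callable[[dict], dict], request: dict, fallback: dict) -> dict:
    """Call one service and contain its failure instead of failing the caller."""
    try:
        return service(request)
    except ConnectionError:
        return fallback


request = {"account": "A-1", "amount": 42.50}
result: Dict[str, dict] = {
    "payment": call(payments_service, request, fallback={"status": "unavailable"}),
    "rewards": call(rewards_service, request, fallback={"status": "pending"}),
}
print(result)
```

The payment is still approved while the rewards outage degrades to a "pending" status, which is the behavior a tightly coupled monolith struggles to provide.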

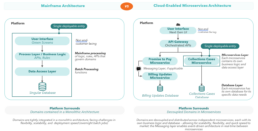

Exhibit 4. Mainframe vs. Microservices

Today Point-to-Point APIs, Tomorrow Domain-Centered Orchestrated APIs

As an example, APIs are vital to the payments ecosystem of issuers, acquirers, networks, and cardholders. In a traditional setup, APIs function as individual units designed for a specific task. However, with their individuality comes limitations, as they often require coordination with numerous other point-to-point APIs to execute tasks efficiently. As the library of APIs expands, managing these individual units becomes increasingly burdensome and complex.

Envision the point-to-point API as a lone musician and the domain-centered orchestrated API as a full-fledged orchestra. When working with point-to-point APIs, users act as conductors, meticulously selecting and coordinating which APIs to engage so that the resulting symphony plays in harmony.

Yet, as the number of point-to-point APIs grows, this coordination process becomes increasingly complex.

On the other hand, picture the domain-centered orchestrated API, where the orchestra is pre-assembled, and each musician seamlessly plays their part in the symphony with finesse. Here, the conductor’s task is simplified, as the orchestrated APIs are inherently aligned with the overarching business goal.

Exhibit 5. Point-to-Point APIs vs Orchestrated APIs

In essence, while point-to-point APIs serve their purpose in isolated contexts, domain-centered orchestrated APIs are the superior choice. They offer:

- Interoperability: Seamless integration between various applications and systems within the core ecosystem, ensuring smooth communication and data exchange

- Alignment with User Journeys: Designed to align with business objectives, providing a more streamlined experience for both end-users and developers

- Simplified Management: Simplify the management process by pre-assembling the necessary components and aligning them with overarching business goals, reducing complexity
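The difference between the two styles can be sketched as follows. This is a hedged illustration only: the three narrow functions and the `open_card_account` function are hypothetical names, and the credit score and limits are made-up values. In the point-to-point style the caller sequences every step; in the orchestrated style a single domain-level call runs the whole journey.

```python
# Three hypothetical point-to-point APIs, each doing one narrow task.
def verify_identity(customer_id: str) -> bool:
    return customer_id.startswith("C")


def check_credit(customer_id: str) -> int:
    return 720  # fixed score, for illustration only


def issue_card(customer_id: str, limit: int) -> dict:
    return {"customer": customer_id, "limit": limit, "status": "issued"}


def open_card_account(customer_id: str) -> dict:
    """Hypothetical domain-centered API: one call that runs the whole journey."""
    if not verify_identity(customer_id):
        return {"customer": customer_id, "status": "rejected"}
    score = check_credit(customer_id)
    limit = 10_000 if score >= 700 else 2_000
    return issue_card(customer_id, limit)


# Point-to-point: the caller acts as conductor and sequences every step itself.
manual = None
if verify_identity("C-100"):
    manual = issue_card("C-100", 10_000 if check_credit("C-100") >= 700 else 2_000)

# Orchestrated: the caller states the business goal and nothing more.
orchestrated = open_card_account("C-100")
print(manual == orchestrated)
```

Both paths produce the same card, but only the orchestrated call keeps the sequencing and business rules in one place, so a change to the journey does not have to be repeated in every caller.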

Today On-Prem, Tomorrow Cloud

In traditional setups, mainframes are housed on-prem. On top of hosting the systems, the organization shoulders the burden of upfront installation, ongoing maintenance, and security of the infrastructure – a trifecta of responsibilities that often come with a hefty price tag. While resilient and reliable, such a setup lacks the needed flexibility and scalability. Furthermore, with an estimated 220 billion lines of COBOL code in use worldwide, mainframes are difficult to navigate: each piece is tightly coupled with another, leaving a tangled web of intricacies.(6)

Though commercial cloud computing has been around for two decades, many financial institutions have remained tethered to their heritage systems and have been slow to fully embrace the cloud’s potential.(7) This reluctance has resulted in high ongoing operating costs, gaps in user experiences, and a stifling of innovative endeavors.

Cloud-native platforms enable innovative products regardless of network, geography, and currency. They offer a golden opportunity for efficiency, adaptability, and scalability.

Artificial Intelligence

Industry leaders, such as Meta and Amazon, have all invested in AI to depose yesterday’s business models. In October 2023, Sopra Banking Software launched its AI-enabled, cloud-native core platform.(8) Microsoft’s Copilot for Finance promises a significant leap in workplace automation. Microsoft’s AI suite has also been adopted by prominent financial institutions like Visa and Northern Trust.(9)

Rising fintechs have also made significant strides in building out their AI strategies. After a month-long trial period in January 2024, Klarna announced that its AI assistant not only does work equivalent to that of 700 full-time agents but is also predicted to generate $40M in profit for 2024.(10) In February 2024, PayPal Ventures announced that it was co-leading a funding round to invest in Rasa, a GenAI startup.(11)

Fit for Purpose Implementation

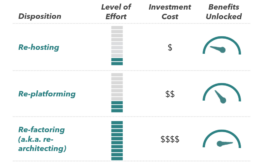

Each institution must choose the right modernization option based on its competitive drive, risk appetite, and ability to invest. Unsurprisingly, the most impactful method also poses the greatest risk, the highest level of effort, and the largest investment.

In an economy that is motivated by cutting costs and boosting shareholder returns in the short run, this may seem like a conundrum. On the opposite end of this spectrum, however, the most conservative option may fail to deliver impactful benefits that move the needle in the long run.

Re-Hosting

The simplest and cheapest option, re-hosting is best associated with the “lift and shift” methodology – moving the legacy system (and its data) from the on-premises mainframe to the cloud.

This method is the most straightforward way to move from the mainframe to the cloud, but that’s the extent of the effort. No changes are made to core functionality; the legacy applications are simply hosted in the cloud.(12) From an evolution perspective, this method only scratches the surface and fails to meaningfully address consumer demands.

Re-Platforming

In a slightly more advanced approach, re-platforming goes one step further after the mainframe is re-hosted into the cloud. Once core functionality and data are reinstalled in the cloud, small enhancements are made to legacy code (as is possible and feasible) to improve functionality.(13) Because legacy code can only be improved so far without being rewritten, the benefits of re-platforming are limited.

Re-Factoring

For the revolutionaries who dare to embrace the future, re-factoring is the most innovative approach, with massive potential to unlock benefits and capture value. Requiring a full rewrite of legacy code, this approach means going “all in” on modernization.

Exhibit 6. Disposition Options (12)(13)

Going All In

If the desired target state includes capturing all the long-term benefits of AI/ML, microservices, experience APIs, event streaming, real-time processing, and more, then re-factoring is a clear choice. It’s a complex undertaking that requires a full rewrite and re-architecture of legacy mainframe code into cloud-based microservices.(14)

Consider the level of effort and precision it would take to rebuild a Ferrari, piece by piece, while it’s traveling 100 mph down the highway. Assuming the organization doesn’t shut off its mainframe for several years while the re-factoring effort takes place, that’s the level of precision required for this method. As each domain is rewritten into microservices, the rest of the legacy mainframe must continue running – and be fully integrated with the newly composed microservices.
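One common way to keep the legacy system running while domains are rewritten one at a time is a routing layer in front of both systems, an approach often called the strangler fig pattern. The source does not name a specific technique, so the Python sketch below is an assumption made for illustration; `legacy_mainframe`, `statements_microservice`, and the `migrated` registry are hypothetical stand-ins.

```python
from typing import Callable, Dict


def legacy_mainframe(domain: str, payload: dict) -> str:
    """Stand-in for a call into the existing COBOL system."""
    return f"mainframe handled {domain}"


def statements_microservice(payload: dict) -> str:
    """Stand-in for the first domain rewritten as a microservice."""
    return "microservice handled statements"


# Domains migrated so far; everything else stays on the mainframe.
migrated: Dict[str, Callable[[dict], str]] = {
    "statements": statements_microservice,
}


def route(domain: str, payload: dict) -> str:
    """Send a request to the new service if one exists, else to the mainframe."""
    handler = migrated.get(domain)
    if handler is not None:
        return handler(payload)
    return legacy_mainframe(domain, payload)


print(route("statements", {}))
print(route("ledger", {}))
```

As each additional domain is rewritten, it is added to the registry, and callers never need to know which system served them. The mainframe can be retired once the registry covers every domain.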

If done successfully, the payoff can be substantial. The new cloud-native microservices architecture is scalable, nimble to change, composable for new capabilities and integrations, and real-time. Batch processing can be retired, and new technology releases can be achieved through agile methodology, enabling rapid speed-to-market. Consumer demands for customizable AI/ML innovation, API experiences, event streaming, and integrated surrounding solutions can all be met. To truly maximize the benefits of modernizing, consider a complete mainframe overhaul through re-factoring.

Exhibit 7. Re-Factoring into Microservices

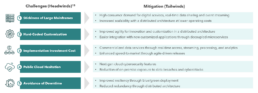

Winds of Change

Financial institutions are arguably more resistant to change than other organizations, as they are risk-averse and fear the consequences of disrupting their existing solutions. Fortunately, tailwinds are gaining strength in propelling demand towards a modernized digital-first state. Leadership should be prepared to address these concerns by highlighting the opportunities and benefits that can be unlocked for the long term.

Exhibit 8. Strong Tailwinds Mitigate the Headwinds

In Conclusion

The mainframe-based environment is due for an upgrade at every financial institution. Whether you re-host, re-platform, or fully re-factor these mainframes, adopting a modern strategy is critical to remaining competitive. While meeting consumer demands for real-time capabilities, intuitive user interfaces, enhanced customization, and the ability to integrate with new applications, financial institutions will also be able to bolster their bottom line.

From rationalizing and sunsetting physical data centers to re-deploying infrastructure resources towards the development of new products, financial institutions are in a prime position to reduce legacy costs. In addition, new digital functionality unlocks incremental revenue streams from event streaming, experience APIs, and real-time data and analytics, to name a few.

Transformation of this magnitude is no small feat, but one that promises to shape the financial sector in the years ahead.

__________________________________________________________________________________________________________

- Fintech in Depth: Core Banking Systems Primer (2021)

- Five Degrees: The Evolution of Core Banking Systems (2022)

- The Payments Crossroad: April 2023 Recap – The Evolution of Core Banking Systems

- MIT Technology Review: Seeking a Successful Path to Core Modernization (2023)

- Hitachi Solutions: Core Banking Modernization: The Time is Now

- Reuters: COBOL Blues

- Cyber Magazine: The History of Cloud Computing (2021)

- PYMTS: Sopra Banking Software Launches AI-Enabled Core Banking System (2023)

- Microsoft press release (2024)

- PayPal Newsroom: PayPal Ventures Co-Leads Rasa’s $30 Million Series C Funding Round (2024)

- FIS Global Payments Report 2023

- FIS Global Payments Report 2023

- Hitachi Solutions: Core Banking Modernization: The Time is Now

- Deloitte: Cloud-Based Core Banking (2019)

- Deloitte: Cloud-Based Core Banking (2019)

- McKinsey: Core Systems Strategy for Banks (2020)