The Accelerated Proliferation

The recent surge in interest in generative AI (GenAI) has turbocharged the global market for generative AI in banking, which is projected to grow from $847 million in 2022 to $9,475 million by 2032(1). The impact of this surge is expected to be felt across the board, with several trends already beginning to take shape:

1. AI could affect up to 90% of working hours at financial institutions (FIs) by automating and minimizing repetitive tasks(1)

2. By 2028, bank employees using AI for daily tasks could be 30% more productive(1)

3. 52% of FIs plan to hire GenAI-specific staff this year(2)

4. Roughly 91% of FIs are either assessing AI technologies or have already integrated them into their operations(3)

5. Organizations that have already implemented AI have seen a 43% improvement in operational efficiency and a 42% increase in competitive advantage(3)

6. 82% of FIs that have implemented AI in their operations have seen cost reductions, and 97% of these firms plan to increase their AI investments(3)

Exhibit 1. AI Use Cases in Financial Services

A Work In-Progress Regulatory Framework

As AI becomes increasingly central to value creation, it also raises concerns. Instances of AI exhibiting problematic behavior have become more frequent, raising apprehensions about fairness, privacy, accuracy, and security. The widespread adoption of GenAI has recently magnified both the capabilities and the risks of AI-powered systems.

Business leaders looking for guidance on AI governance find a hodgepodge of policies and procedures, with little explanation of how they fit together or which regulations apply to them. Over 68% of FI leaders noted that fear of the black-box nature of AI and the increased risk of data breaches held back their implementation of AI(1). Meanwhile, the number of AI policies has surged over the last couple of years:

Exhibit 2. AI Regulation(2)

Risks to be Mitigated

The biggest concerns with AI all relate to the core pillars on which financial institutions are built, such as accuracy, security, simplicity, and privacy(1,2). Top-of-mind issues include:

1. Cybersecurity vulnerabilities created by dependence on AI

2. Flawed strategic decisions informed by inaccurate AI recommendations

3. Regulatory noncompliance and ethical risks of AI

4. Erosion of customer trust through AI failures

5. Legal responsibility for decisions and recommendations made by AI

6. Failure of AI-driven systems in mission-critical or life-or-death scenarios

7. Discrimination enabled by AI through algorithmic decisions that stem from biases in training data that mirror societal inequalities

8. Lack of transparency in the decision-making processes of AI tools

9. Job displacement concerns triggered by AI advancements, and the need to upskill and reskill to meet evolving workforce demands

Exhibit 3. AI Related Risks

Business Operations Challenges

1. Regulatory Compliance

a) Compliance Awareness Gap: AI teams frequently do not fully understand data protection, privacy, and anti-discrimination laws, such as GDPR, CCPA, ECOA, and EEOA:

- As the ongoing lawsuit between The New York Times and OpenAI shows(1), the unclear legal landscape around emerging technologies such as GenAI exposes enterprises to greater legal and regulatory risk

b) Monetization Risks: Companies that monetize AI-generated data and insights without a thorough compliance review risk legal action and brand damage:

- Using GenAI in regulated consumer-facing communications, such as consumer information notices, may subject firms to private law requirements(1)

c) Insufficient Governance and Policy: Because AI/ML adoption is still nascent at many firms, they may lack the necessary guardrails and governance frameworks:

- Fewer than 40% of firms have “an agreed definition of AI/ML,” and only 10% have a separate AI/ML policy in place(2)

2. Misalignment with Organizational Objectives

a) Misalignment with Strategic Vision: A clear AI strategy that is understood across the entire organization is a differentiator. Implementing AI without considering the implications for an organization’s competitive advantage can become a long-term hindrance:

- Klarna, the buy-now-pay-later pioneer, announced with OpenAI that its AI chatbot does work equivalent to that of 700 full-time employees, handling inquiries for 150 million clients(3). While this approach may succeed for Klarna, if your competitive advantage is your clients’ ability to speak with a representative for personalized assistance, automated AI solutions may alienate loyal customers

- 85% of AI and machine learning projects fail to deliver on targeted business objectives, and only 53% of projects make it from prototype to production(4)

b) Overinvestment without ROI: FIs may invest significant resources in AI projects without accurately assessing or realizing their potential ROI. This can lead to overinvestment in projects that fail to deliver the anticipated financial benefits, resulting in lost opportunities:

- Today, 72% of organizations that have invested in AI have not been able to generate measurable business outcomes(1). FIs must develop high-confidence business cases and put an appropriate benefits-realization program in place to benefit from their AI investments.

3. Bias and Reliability in AI Decision Making

a) Built-in Bias and Lack of Human Controls: AI bias can affect multiple areas of FIs, such as lending practices and investment strategies:

- AI bias in credit scoring can lead to incorrect credit denials or less favorable terms for certain demographic groups because of biased historical training data

- Loan approval algorithms used by fintech companies have been found to exhibit racial bias, with Black and Latino applicants approved at lower rates than white applicants, even after controlling for factors such as credit score and income(2)
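A common first screen for the kind of disparity described above is to compare approval rates across applicant groups. The sketch below computes each group's approval rate and its ratio to the most-favored group's rate, flagging ratios below 0.8; that threshold is the "four-fifths rule" from US employee-selection guidance, borrowed here as an assumed screening cutoff. The decision data and group labels are hypothetical, and a raw rate comparison does not control for credit score or income, so it is a starting point for review rather than a finding of bias.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs; returns {group: approval rate}."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}

def adverse_impact_ratios(decisions, threshold=0.8):
    """Each group's approval rate divided by the highest group's rate, plus a flag
    when the ratio falls below the threshold (0.8 = the 'four-fifths rule')."""
    rates = approval_rates(decisions)
    reference = max(rates.values())
    if reference == 0:
        raise ValueError("no approvals in any group; ratios are undefined")
    return {group: (rate / reference, rate / reference < threshold)
            for group, rate in rates.items()}

# Hypothetical decisions: group A approved 80 of 100 applicants, group B approved 56 of 100
decisions = [("A", i < 80) for i in range(100)] + [("B", i < 56) for i in range(100)]
for group, (ratio, flagged) in sorted(adverse_impact_ratios(decisions).items()):
    print(group, round(ratio, 2), "FLAG" if flagged else "ok")
```

In this hypothetical data, group B's ratio is 0.56 / 0.80 = 0.7, so it would be flagged for a closer look that controls for legitimate credit factors.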

b) High Reliance and Lack of Originality: Relying too heavily on AI tools without sufficient human oversight can lead to poor decision-making, potentially resulting in lost customers and regulatory fines. AI outputs also depend on the data sets used to train the underlying models, some of which may contain private or copyrighted content, creating ownership and licensing issues for the data being used.

4. Data Usability Amidst AI Misinformation

a) Unstructured and Low-Quality Data Inputs: When low-quality data is used as input for AI models, the output will also be low quality:

- Today, roughly 80% of banking data is unstructured(3), raising the risk of error when the data is used for AI use cases

b) Explainability and “Black Box” Solutions: Many AI and GenAI solutions face “explainability challenges” that make it difficult to assess the accuracy and potential bias of their outputs, and therefore to trust the models’ reliability:

- 70% of firms noted that the limited traceability of LLM sources discouraged them from using GenAI

- 68% said that fear of the black-box nature of the technology and the increased risk of data breaches held back their use of GenAI(4)

c) Learning Limitations: Unlike humans, AI systems lack the nuanced judgment and contextual understanding needed for the diverse environments in which they operate. The effectiveness of an AI or ML system typically hinges on the quality of its training data and the breadth of scenarios covered during training:

- The absence of contextual understanding and limited judgment are important factors to consider in risk-based assessments and strategic deployment evaluations

Infiltration Risks

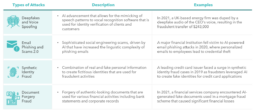

1. AI-Generated Attacks and Fraud

In recent years, FIs have faced a growing challenge in combating AI-enabled fraud. Scammers have improved their capabilities and are shifting toward more sophisticated forms of fraud that FIs are not currently equipped to detect and address. If FIs remain unprepared, these threats create systemic risk for the financial system. Security and risk officers at leading lending institutions agreed across the board that synthetic fraud had increased over the previous 24 months, and 87% of institutions admitted they had extended credit to synthetic customers(1).

Exhibit 4. Types of AI/ML Generated Fraud

2. AI Model Attacks

Like any technology, AI/ML models are not immune to adversarial attacks from malicious actors. An astonishing 90% of organizations are not ready to defend themselves against adversarial machine learning attacks, and a large majority lack the tools needed to secure their AI/ML systems(1).

For example, in a membership inference attack, an attacker tries to determine whether a particular record, or set of records, was part of the data set used to train an AI system(2). Researchers at the University of California, Berkeley were able to interfere with a data set being used to train a healthcare AI/ML model, identify the personal data used to train it, infiltrate the model, and extract personal patient data. The test case raised serious concerns about the security and privacy of the data sets used to train models. In another instance, in 2020, researchers at the University of California, Berkeley discovered vulnerabilities in facial recognition systems that allowed attackers to perform model inversion attacks and reconstruct images of individuals from the model’s training data set, compromising user privacy and security(3).
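The simplest form of membership inference exploits the tendency of models to be more confident (lower loss) on records they were trained on. The sketch below is a loss-threshold attack: it guesses "member" whenever the model's loss on a record falls below a cutoff, and measures leakage as the attack's true-positive rate minus its false-positive rate. The confidence values and the cutoff are hypothetical; real attacks, such as those using shadow models, are more elaborate.

```python
import math

def record_loss(confidence_in_true_label):
    """Cross-entropy loss of the target model on one record (clamped to avoid log(0))."""
    return -math.log(max(confidence_in_true_label, 1e-12))

def loss_threshold_attack(confidences, threshold):
    """Guess 'was in the training set' whenever the model's loss is below the threshold."""
    return [record_loss(c) < threshold for c in confidences]

def attack_advantage(member_conf, nonmember_conf, threshold):
    """True-positive rate minus false-positive rate; 0 means no detectable leakage."""
    tpr = sum(loss_threshold_attack(member_conf, threshold)) / len(member_conf)
    fpr = sum(loss_threshold_attack(nonmember_conf, threshold)) / len(nonmember_conf)
    return tpr - fpr

# Hypothetical model confidences in each record's true label
members = [0.99, 0.97, 0.95, 0.92]      # records the model was trained on
non_members = [0.70, 0.55, 0.93, 0.60]  # records the model never saw
threshold = record_loss(0.90)           # assumed cutoff: the loss at 90% confidence
print(attack_advantage(members, non_members, threshold))
```

A clearly positive advantage indicates that the model's behavior reveals who was in its training data, which is the privacy exposure described above.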

Exhibit 5. AI/ML Model Attacks (4)

Building A Future Proof AI Strategy

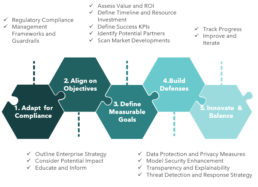

To address the challenges of AI-enabling their businesses, FIs need to develop a tailored strategy:

Exhibit 6. AI Strategy Optimization

1. Adapt for Compliance

Organizations should analyze regulatory and technological constraints such as ESG factors, brand reputation, compliance, and data management. Planning for all of these factors helps avoid regulatory scrutiny and potential reputational damage.

a) Analyze Constraints: Analyze areas of potential business inhibitors: ESG, brand values, investor relations, regulatory restrictions, and critical business partnerships

b) Develop Risk Management Framework: Establish a robust risk management framework tailored for AI technologies to ensure comprehensive governance for the use of AI. This includes validation and verification of models, ongoing monitoring, and response plans

c) Ensure Data Compliance: Comply with AI data usage regulations, and ensure that available data is correctly collected and structured so that machine learning models can function according to the business goals set out in the design phase of the AI program.
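One concrete form of the "ongoing monitoring" in the risk management framework is to track whether the data a model sees in production still resembles the data it was built on. A metric commonly used for this in credit-risk model monitoring is the Population Stability Index (PSI), which compares binned distributions of a score or input. The sketch below assumes hypothetical bin counts, and the 0.1 / 0.25 cutoffs are a common industry rule of thumb, not a regulatory standard.

```python
import math

def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between a baseline and a current binned distribution:
    sum over bins of (actual% - expected%) * ln(actual% / expected%)."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both distributions must use the same bins")
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    total = 0.0
    for e, a in zip(expected_counts, actual_counts):
        e_pct = max(e / e_total, eps)  # floor empty bins to avoid log(0)
        a_pct = max(a / a_total, eps)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total

def stability_label(value):
    # Common rule-of-thumb cutoffs (assumed, not a regulatory standard)
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "moderate shift"
    return "significant shift"

baseline = [100, 200, 400, 200, 100]  # hypothetical score-bin counts at model development
current = [20, 100, 380, 300, 200]    # hypothetical counts from recent production data
value = psi(baseline, current)
print(round(value, 3), stability_label(value))
```

A "significant shift" result would typically trigger the validation and response plans named in item (b) rather than an automatic model change.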

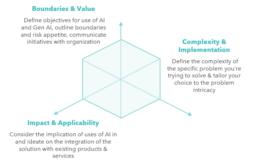

2. Align on Objectives

Align AI implementation with organizational goals. Firms whose CEOs participate in AI initiative ideation realize 58% more business benefits than firms whose CEOs are uninvolved(1). To implement an AI solution aligned with the broader firm vision, executives should take a comprehensive approach:

Exhibit 7. Building on AI Strategy & Vision

3. Define Measurable Goals

Successful implementation of AI initiatives depends not only on technological capability but also on a clear understanding of the value they aim to deliver for an organization. Defining and quantifying the business value of AI initiatives is therefore paramount to their success.

a) Identify Key Focus Areas: Assess all business processes, from customer service and marketing to operations and finance, to pinpoint where AI can drive meaningful value and impact

b) Define Timeline and Investment Required: Establish implementation timelines and investment requirements that account for factors such as data availability and infrastructure readiness

c) Establish Realistic KPIs: KPIs should align with overarching business objectives and may include metrics such as cost savings achieved, customer satisfaction scores, or operational efficiency improvements

d) Assess Value and ROI: Quantify and model expected benefits from cost savings, revenue growth, and competitive advantage perspectives.
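The value assessment in step (d) can start as a simple yearly cash-flow model. The sketch below computes undiscounted ROI, net present value (NPV), and the payback year for a hypothetical AI initiative; the yearly figures and the 8% discount rate are assumptions for illustration, and a real business case would break benefits down by cost savings, revenue growth, and competitive advantage.

```python
def roi(benefits, costs):
    """Undiscounted ROI over the horizon: (total benefits - total costs) / total costs."""
    total_cost = sum(costs)
    return (sum(benefits) - total_cost) / total_cost

def npv(benefits, costs, rate):
    """Net present value of yearly net cash flows; year 0 is not discounted."""
    return sum((b - c) / (1 + rate) ** t for t, (b, c) in enumerate(zip(benefits, costs)))

def payback_year(benefits, costs):
    """First year in which the cumulative net benefit is non-negative, or None."""
    cumulative = 0.0
    for year, (b, c) in enumerate(zip(benefits, costs)):
        cumulative += b - c
        if cumulative >= 0:
            return year
    return None

# Hypothetical AI initiative, in US$ millions per year (year 0 = build year)
benefits = [0.0, 1.5, 2.5, 3.0]  # cost savings plus revenue uplift
costs = [2.0, 1.0, 0.8, 0.8]     # build, run, and change-management costs
print(f"ROI {roi(benefits, costs):.0%}, NPV ${npv(benefits, costs, 0.08):.2f}M, "
      f"payback in year {payback_year(benefits, costs)}")
```

Keeping the model this explicit makes it easy to compare the projected figures with the KPIs from step (c) once the initiative is live.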

Exhibit 8. Mastercard AI Principles & Scope (1)

4. Build Defenses

a) Data Protection and Privacy Measures:

Adopt secure data handling methods, including encryption and access controls. The global average cost of a data breach in 2023 was US$4.45 million, a 15% increase over three years(4). Secure data handling techniques and encryption can mitigate the risk of data breaches associated with AI implementation.

b) Model Security Enhancements:

Implement adversarial training to bolster model robustness, and continuously monitor models for anomalies and deviations from expected behavior. With regulations like GDPR and CCPA, organizations face significant penalties for mishandling customer data; for example, GDPR allows fines of up to €20 million or 4% of annual global turnover, whichever is higher, for non-compliance(5). Privacy-preserving techniques such as federated learning and homomorphic encryption can help firms comply with these regulations and protect sensitive data.
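The "whichever is higher" rule means the €20 million figure is a floor on the ceiling: it binds only for firms with annual global turnover below €500 million (20 / 0.04), and above that the 4% term dominates. A minimal sketch of the statutory maximum, using hypothetical turnover figures:

```python
def gdpr_max_fine_eur(annual_global_turnover_eur):
    """Statutory ceiling for the higher tier of GDPR fines: EUR 20 million or 4% of
    total worldwide annual turnover, whichever is higher. Actual fines are set case
    by case by supervisory authorities, up to this ceiling."""
    return max(20_000_000, annual_global_turnover_eur * 4 / 100)

# Hypothetical turnovers: below EUR 500M the EUR 20M term sets the ceiling
for turnover in (100_000_000, 500_000_000, 2_000_000_000):
    print(f"turnover EUR {turnover:,} -> max fine EUR {gdpr_max_fine_eur(turnover):,.0f}")
```

For a large FI, the exposure therefore scales with revenue rather than stopping at €20 million.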

c) Threat Detection and Response Strategies:

Employ threat intelligence platforms for early detection of ML attacks, and conduct adversarial testing and red team exercises to assess model resilience. Tech companies like Google and Facebook regularly run such exercises, deliberately injecting adversarial examples or simulated attacks into their AI systems to evaluate robustness and identify vulnerabilities(6).
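To make "injecting adversarial examples" concrete, the sketch below applies the fast gradient sign method (FGSM) to a toy logistic-regression fraud scorer. For log-loss, the gradient with respect to each input feature is (score - label) times that feature's weight, so FGSM nudges every feature by at most epsilon in the direction that increases the loss. The weights, transaction features, and epsilon are hypothetical; a red team would apply the same idea to the production model and record how small a perturbation flips its decisions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fraud_score(weights, bias, x):
    """Toy logistic-regression fraud model: estimated probability that x is fraudulent."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(weights, bias, x, label, epsilon):
    """Fast gradient sign method against the toy model above.

    For log-loss with label y in {0, 1} and score p, the gradient with respect to
    feature i is (p - y) * w_i; stepping epsilon in the sign of that gradient
    increases the loss while changing no feature by more than epsilon.
    """
    p = fraud_score(weights, bias, x)
    return [xi + epsilon * sign((p - label) * w) for w, xi in zip(weights, x)]

# Hypothetical model weights and a transaction the model correctly flags as fraud
weights, bias = [2.0, -1.0, 1.5], -1.0
x = [1.0, 0.5, 0.8]
x_adv = fgsm(weights, bias, x, label=1, epsilon=0.4)
print(f"score before: {fraud_score(weights, bias, x):.3f}")
print(f"score after:  {fraud_score(weights, bias, x_adv):.3f}")
```

Here a bounded change to each feature pushes the fraud score below 0.5, so the transaction would slip past a 0.5 cutoff. Perturbed examples found this way can also be fed back into the adversarial training mentioned under model security enhancements.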

5. Innovate & Iterate

a) Measure & Improve Continuously:

64% of businesses believe that AI will help increase their overall productivity(1). However, without clearly defined KPIs, it will remain unclear whether AI is delivering on its intended objectives. Key actions executives can take to continuously improve their AI strategy include:

- Conduct periodic assessments of AI initiatives to evaluate their effectiveness, ROI, and alignment with organizational goals

- Gather feedback from stakeholders, end users, and subject matter experts to identify areas for improvement

b) Keep A Pulse On Market Developments:

Companies can stay ahead of the competition by monitoring advancements in AI technologies, industry best practices, and regulatory changes to ensure their AI strategy remains relevant and competitive. Companies can also engage with industry forums, attend conferences, and participate in knowledge-sharing networks to stay informed:

- 37% of companies are currently developing an AI strategy(2)

- 28% of companies already have a holistic AI strategy in place(2)

- 25% of companies have AI strategies focused on a specific set of use cases(2)

In Closing...

The current technological advancements in AI present an abundance of opportunities. However, in the rush to implement AI-driven businesses, FIs must remain vigilant about potential risks and disruptions to broader business objectives. Key risks to keep top of mind include internal risks from modernizing technologies and external infiltration threats driven by technological advancements and malicious actors.

FIs must develop action and remediation plans to defend their institutional integrity and protect clients. The expertise and guidance of specialists can help develop de-risked AI strategies and solutions and prevent opportunities from becoming failed investments and organizational liabilities. Through comprehensive AI strategy development that weighs both internal and external risks, FIs can stay ahead of the curve in today’s evolving world. AI’s potential keeps growing, but so do its risks. Are you prepared to deal with them?

__________________________________________________________________________________________________________

1. Kanerika – “Generative AI In Financial Services And Banking: Top 10 Use Cases” (2023)

2. Forbes – “The AI-Bias Problem And How Fintechs Should Be Fighting It” (2021)

3. NVIDIA – “AI Takes Center Stage” (2024)

________________________________________

1. BCG – “A Generative AI Roadmap for Financial Institutions” (2023)

2. Medium – “A history of AI regulations” (2023)

________________________________________

1. McKinsey – “The state of AI in 2023: Generative AI’s breakout year” (2023)

2. Forbes – “The Benefits And Risks of AI In Financial Services” (2023)

________________________________________

1. New York Times – “The Times Sues OpenAI and Microsoft Over A.I. Use of Copyrighted Work” (2023)

2. Wharton – “How Can Financial Institutions Prepare for AI Risks?” (2021)

3. Gartner – “Klarna froze hiring because of AI” (2024)

4. InfoWorld – “Why AI investments fail to deliver” (2021)

________________________________________

1. Gartner – “Gartner Says Most Finance Organizations Lag Other Functions in AI Implementation” (2023)

2. NBER – “Consumer-Lending Discrimination in the FinTech Era” (2021)

3. CIOPages – “Solving Unstructured Data Challenges with AI and ML” (2023)

4. BCG – “A Generative AI Roadmap for Financial Institutions” (2023)

________________________________________

1. The Financial Brand – “AI Arms Race: Banks and Fraudsters Battle for the Upper Hand” (2024)

________________________________________

1. Microsoft – “Adversarial machine learning explained: How attackers disrupt AI and ML systems” (2022)

2. OWASP – “ML04:2023 Membership Inference Attack” (2023)

3. Vice – “’Faceless Recognition System’ Can Identify You Even When You Hide Your Face” (2016)

4. Google – “Why Red Teams Play Central Role in Helping Organizations Secure AI Systems” (2023)

________________________________________

1. BCG – “A Guide to AI Governance for Business Leaders” (2023)

________________________________________

1. Mastercard – “How Mastercard is leveraging AI to revolutionize e-payment experience” (2023)

2. Brighterion – “Mastercard’s five pillar approach to strategic implementation of AI” (2020)

3. Emerj – “Artificial Intelligence at Mastercard – Current Projects and Services” (2019)

4. IBM – “Cost of a Data Breach Report 2023” (2023)

5. SectigoStore – “5 Important Data Privacy Laws: CCPA, HIPAA, GDPR, GLBA, and LGPD” (2021)

6. Google – “Google’s AI Red Team: the ethical hackers making AI safer” (2023)

________________________________________

1. Forbes – “24 Top AI Statistics and Trends In 2024” (2023)

2. IBM – “IBM Global AI Adoption Index 2022” (2022)